Hi, I'm Edward. 👋

This summer, I was one of tonari’s software engineering interns, and one of three members working remotely. My main project was to re-implement and optimize the encoding and decoding portion of tonari's video pipeline, though ultimately my work ended up spanning far more of tonari's codebase, touching both hardware and software. Along the way, I picked up some valuable insights, not just on the technical side but also about work-life balance and how much tonari values it.

My internship was meant to take place in person with the tonari team in Japan, but in the end I unfortunately wasn’t able to go. A combination of stricter Japanese immigration requirements due to COVID-19 and very specific university policies made the visa application process a bureaucratic nightmare, and I only found out that I couldn't travel two months before my internship was set to begin.

Interning and working remotely is not really new to me at this point; because of COVID-19, my last internship was also remote, even though the company was in my hometown of Chicago. Even so, my experience was incredibly different this time around: I'd be working on a completely different schedule, with software running on hardware that I'd never seen in person. And I think my experience at tonari was well worth the challenge.

Onboarding

The onboarding process to the main codebase was incredibly fast compared to what I'd experienced before. The codebase was very well organized, I had a whole structure-drawing session and overview with Ryo (co-founder) and Brian (my mentor), and the setup process was very cleanly documented.

All in all, it took about a day or two to get the main video and audio components of tonari building and running on my laptop. To familiarize myself with the codebase, I took a stab at a number of easier issues. It was a bit overwhelming at first, but thanks to how well structured, concise, and modular the codebase was, I felt at home in the whole codebase by the end of my internship.

Time Differences

The onboarding process was also a great time to get acquainted with my new schedule and figure out how to make the most out of it.

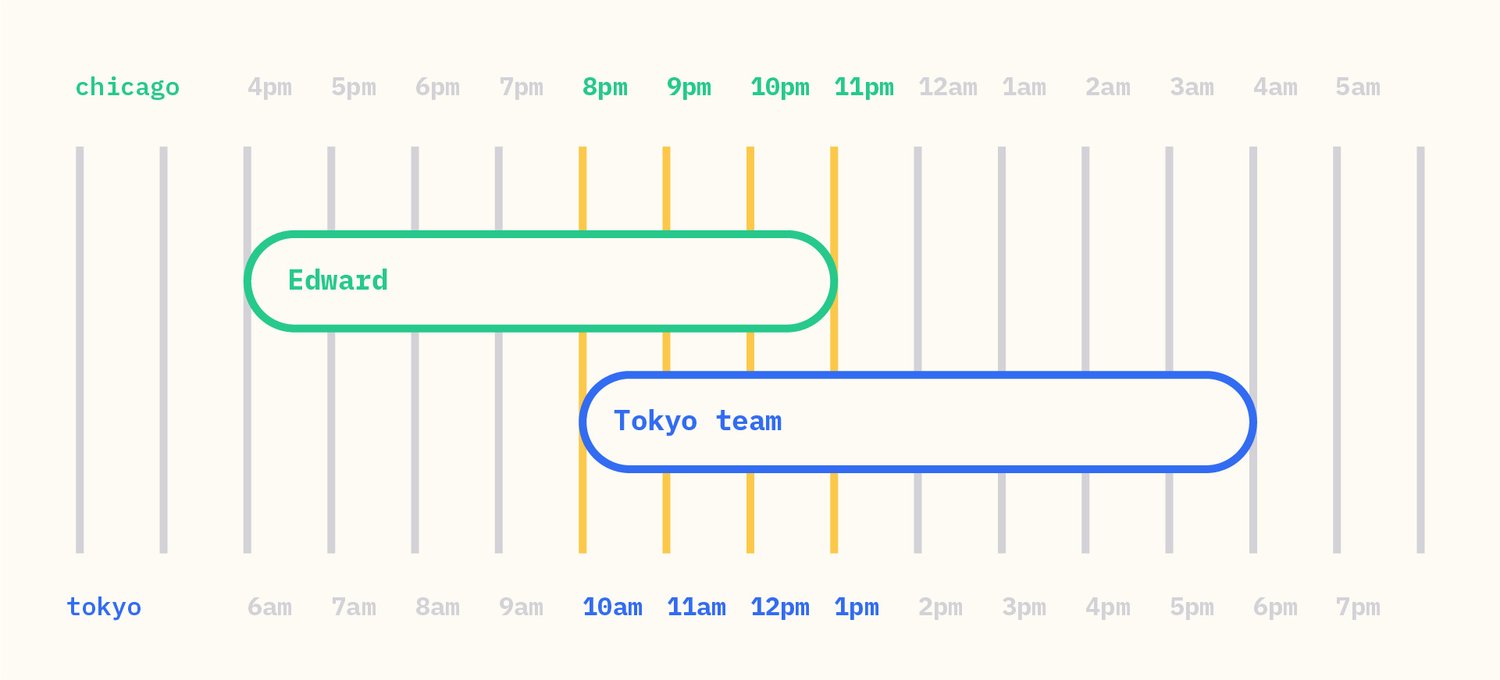

There's a 14-hour time difference between Japan and Chicago. To get a greater overlap, I decided to work from 4pm to 11pm (also because I'm a night owl), which gave me three overlapping core hours with the main Tokyo schedule, from 8pm to 11pm my time.

I also decided to work from Monday to Friday in US time, which meant that only my Monday to Thursday overlapped with the main Tokyo schedule. On one hand, this meant I had really good focus time on my own for half of the day. On the other hand, working remotely already meant that my time with the team each day was fairly limited. Trying to balance these conflicting needs while making the internship a productive experience was a pretty unique challenge to deal with. I'd say that after a little bit of trial and error, it ended up working pretty well for me.

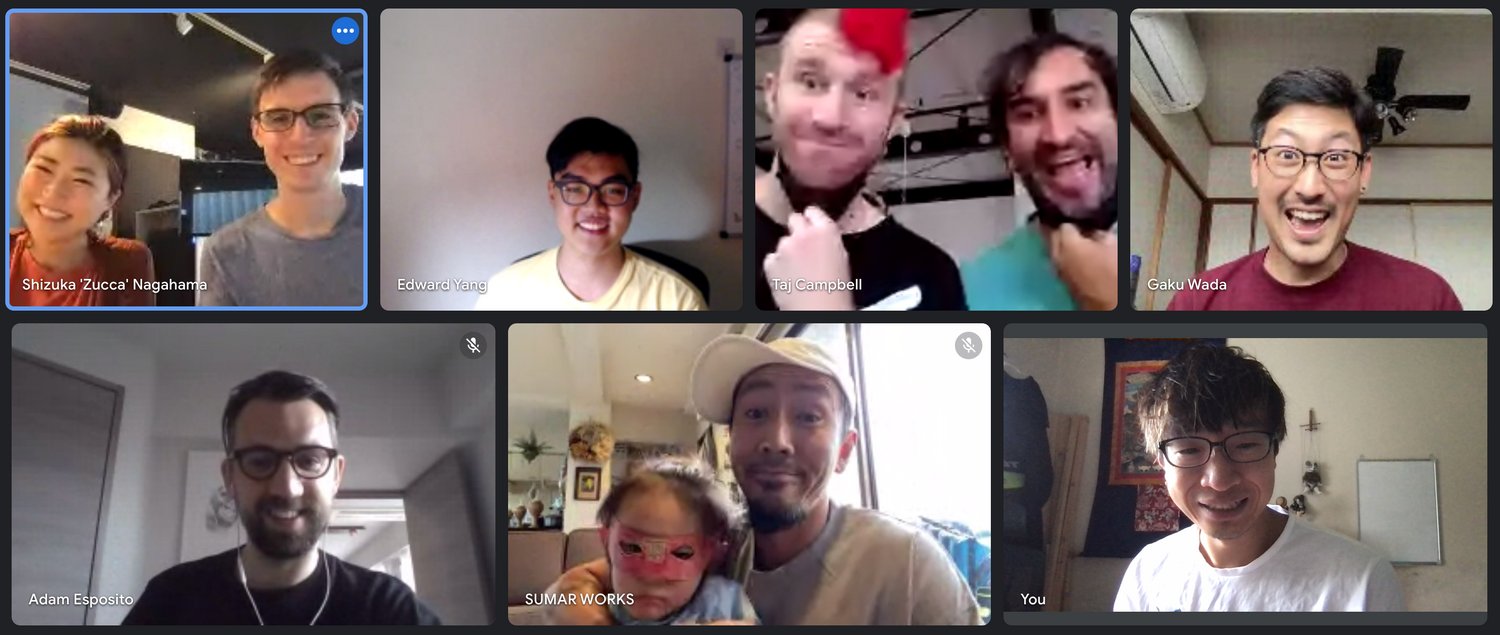

Work-wise, over the course of my internship, I got really good at figuring out what I might need guidance on the next day and asking those questions during my overlapping core hours the day before. For the first couple of hours each day I would work on my own. If I got stuck, I would keep track of my questions and then work on something else. During core hours, I'd sync up with Brian and figure out what I'd had trouble with. For these syncs, we'd use Discord or an always-on Google Meet earlier on in my internship, and later on we used tonari's software stack itself.

Overall, I found that this schedule worked pretty well: I could focus when working on my own and keep a running list of questions, never really getting bogged down on anything for too long. Not only that, but the always-on meeting and especially tonari's software made it feel like I was actually in the office, with all the ambiance and good conversations associated with that. I also got to be the first international user of tonari, which was super cool to set up and experience. 😊

Main Project

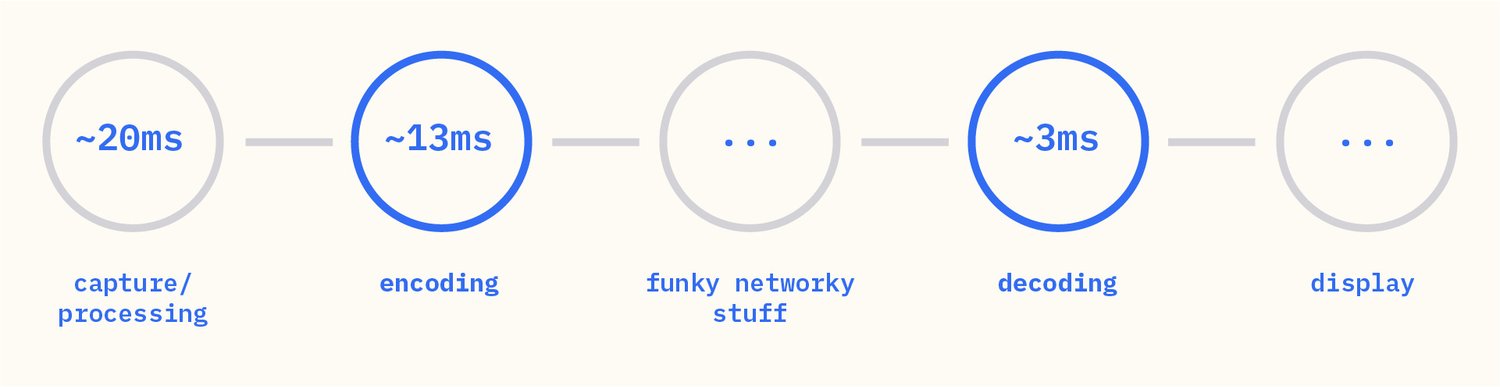

As an intern, my main project was to write a Rust wrapper for the NVIDIA Video Codec SDK. In our current implementation, we wrap NVPipe, which is a C++ layer (since deprecated) written above the Video Codec SDK. tonari's glass-to-glass latency is currently 130 milliseconds (as measured by Jake et al. earlier) for the whole pipeline. With our current implementation, the encode-decode steps take around 17 milliseconds total, which is a good chunk of that latency and makes them a large area for potential optimization.

Our current pipeline, however, depends on NVPipe to interface with the Video Codec SDK, which has been long since deprecated. This forces us to use a version of the Video Codec SDK that is 2 major versions behind. We have had an issue with this older version's interaction with newer drivers (once leading Ryo on a wild goose chase right before an installation), which is clearly not exactly ideal. More importantly though, newer versions of the Video Codec SDK might provide us with more avenues by which to optimize the time spent on this part of the pipeline. With our own implementation layer, we can remove unnecessary colorspace conversions, reduce memory copies, optimize buffer management, and tune all the parameters for the real-time use case we have. Along with that, directly wrapping the Video Codec SDK with Rust would make it safer to use instead of having to maintain additional C++ glue code that is potentially untested and bug-prone, and may give other Rust developers easy access to the Video Codec SDK in the future as well.

2021-08-10 11:37:42,976 INFO [encoder::encode_multi] Encoded last 500 frames in 2.070845972s, 4.141691ms per frame

2021-08-10 11:37:51,320 INFO [encoder::encode_multi] Encoded last 500 frames in 2.069524838s, 4.139049ms per frame

2021-08-10 11:37:59,664 INFO [encoder::encode_multi] Encoded last 500 frames in 2.070782916s, 4.141565ms per frame

2021-08-10 11:38:08,009 INFO [encoder::encode_multi] Encoded last 500 frames in 2.085806551s, 4.171613ms per frame

2021-08-10 11:38:16,353 INFO [encoder::encode_multi] Encoded last 500 frames in 2.078167702s, 4.156335ms per frame

2021-08-10 11:38:16,366 INFO [encoder::encode_multi] Encoded 5000 frames in 20.734256828s, 4.146851ms per frame

And so we wrote a new implementation. Not only was this a successful endeavor, but we ended up achieving far more of our performance goals than expected. When testing the new implementation of the encoder with a 3K 60 fps stream, we managed to achieve a 4 millisecond encode time, only possible because of the newer presets available and direct access to an OpenGL implementation (as opposed to having to convert between CUDA and OpenGL representations). Decode time with the new decoder implementation was measured at 0.5 milliseconds, which is an absolutely incredible result. In total, this should drop around 10 milliseconds off of our total latency once it's integrated into the main codebase!
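For a sense of how these numbers fit together, here is a small back-of-the-envelope sketch. It uses only the rounded figures quoted in this post (17 ms for the old encode-decode pair, about 4 ms encode and 0.5 ms decode for the new one) plus the final line of the log above. The function names are made up for illustration and are not part of tonari's codebase.

```rust
/// Average time per frame in milliseconds, given a frame count and the
/// wall-clock time it took to encode that many frames.
fn ms_per_frame(frames: u32, elapsed_secs: f64) -> f64 {
    elapsed_secs * 1000.0 / f64::from(frames)
}

/// Latency saved (in milliseconds) when an encode-decode pair that took
/// `old_total` is replaced by one taking `new_encode + new_decode`.
fn latency_saved_ms(old_total: f64, new_encode: f64, new_decode: f64) -> f64 {
    old_total - (new_encode + new_decode)
}

/// Time available per frame at a given frame rate, in milliseconds.
fn frame_budget_ms(fps: f64) -> f64 {
    1000.0 / fps
}
```

Plugging in the figures, `ms_per_frame(5000, 20.734256828)` reproduces the roughly 4.15 ms per frame reported in the log, and `latency_saved_ms(17.0, 4.0, 0.5)` comes out to 12.5 ms, in the same ballpark as the "around 10 milliseconds" estimate. At 60 fps, `frame_budget_ms(60.0)` is about 16.7 ms, so the old 17 ms encode-decode pair alone took about as long as a whole frame interval.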

Porting Gotchas

Because Rust has good tooling for integration with C/C++ code, rewriting the majority of the NVPipe/Video Codec SDK sample code was surprisingly not too bad. Rust's bindgen takes care of generating the raw bindings, but what bindgen generates isn't very pretty; wrapping C/C++ is unsafe, so it was my responsibility to wrangle the messy generated functions and structures into a safe API for the user.

The hard part of the porting process was really getting the API calls to actually work safely. There were a couple of gotchas in the process of porting the decoder that took a disproportionately long time to debug.

As a brief example, the decoder in the original example application has an internal frame buffer that holds memory for video frames that are either already decoded or still being decoded. This is an optimization so that memory does not have to be reallocated for every newly decoded frame. To keep things synchronized, there's a mutex over this frame buffer to allow for multi-threaded decoding. However, the basic method to get a decoded frame in the original implementation locked that mutex, returned the pointer to that frame, and then unlocked the mutex on return from the function, without taking into account whether the user was done working with that frame. Here's the original function in question:

    uint8_t* NvDecoder::GetFrame(...)
    {
        ...
        // The lock is released as soon as this function returns,
        // but the caller keeps using the returned pointer afterwards.
        std::lock_guard<std::mutex> lock(m_mtxVPFrame);
        return m_vpFrame[m_nDecodedFrameReturned++];
        ...
    }

While returning a bare pointer might lead to slight performance gains, this method is also an incredibly easy way to cause a data race. If the user is reading a frame while the decoder is decoding a new frame into that same slot, the user could easily end up reading garbage data. To expose this interface in an idiomatic manner, we decided to use parking_lot's MutexGuard to automatically lock and unlock the frame based on when the user was done accessing it. This was probably one of the easier fixes we had to deal with.
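To illustrate the guard-based approach, here is a minimal sketch. It is not the actual wrapper code: the `Decoder` type, its methods, and the one-mutex-per-slot layout are assumptions made for the example, and `std::sync::Mutex` stands in for parking_lot so that the snippet has no dependencies.

```rust
use std::sync::{Mutex, MutexGuard};

/// Hypothetical stand-in for the decoder's internal frame storage.
struct Decoder {
    // One mutex per frame slot (an assumption for this sketch), so a reader
    // only blocks writes to the slot it is currently holding.
    frames: Vec<Mutex<Vec<u8>>>,
}

impl Decoder {
    fn new(slots: usize, frame_len: usize) -> Self {
        let frames = (0..slots)
            .map(|_| Mutex::new(vec![0u8; frame_len]))
            .collect();
        Decoder { frames }
    }

    /// Returns a guard instead of a bare pointer: the slot stays locked
    /// until the caller drops the guard.
    fn get_frame(&self, slot: usize) -> MutexGuard<'_, Vec<u8>> {
        self.frames[slot].lock().expect("frame mutex poisoned")
    }

    /// Simulates the decoder writing a new frame into a slot.
    /// Returns false if the slot could not be locked, e.g. because a
    /// reader still holds its guard.
    fn try_decode_into(&self, slot: usize, value: u8) -> bool {
        match self.frames[slot].try_lock() {
            Ok(mut frame) => {
                frame.fill(value);
                true
            }
            Err(_) => false,
        }
    }
}
```

While a guard returned by `get_frame(0)` is alive, `try_decode_into(0, ..)` returns `false` rather than overwriting the data being read; once the guard goes out of scope, the same call succeeds. The borrow checker also prevents the guard from outliving the `Decoder` itself.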

Other issues mainly had to do with C/Rust interoperation through FFI (Foreign Function Interface). With both the encoder and decoder implementations, a common pain point was pointer allocation and memory ownership. When we created data structures to pass to Nvidia's APIs, Rust would automatically allocate them on the stack and deallocate them when the function returned, leading to a whole host of use-after-free issues with no real way to debug them except a good hunch. To solve this, we had to explicitly heap-allocate these data structures so that they outlived the stack frame, and pass references to those allocations to Nvidia's API instead. For example, consider the following snippets of code:

    // api.h
    typedef struct _IMPORTANTSTUFF {
        ...
    } IMPORTANTSTUFF;

    // This function might hold onto data for use later, who knows what the heck it does?
    // Treat it like a black box.
    extern void doStuff(IMPORTANTSTUFF* data);

    // api.rs
    #[repr(C)]
    struct IMPORTANTSTUFF {
        ...
    }

    extern "C" {
        fn doStuff(data: *mut IMPORTANTSTUFF);
    }

    // We want to provide a safe wrapper over these rowdy C functions
    struct Wrapper {
        ...
    }

    impl Wrapper {
        fn do_stuff(...) {
            ...
            // `tmp` lives on this stack frame and is gone once do_stuff returns
            let mut tmp = IMPORTANTSTUFF {...};
            unsafe {
                doStuff(&mut tmp);
            }
            ...
        }
    }

In this case, the pointer passed to doStuff no longer carries any information about tmp's lifetime, because that information has to be thrown away to interface with C code. To ensure that tmp lives long enough, we amended this to the following, holding tmp in a Box inside the Wrapper so that it stays alive for as long as the Wrapper keeps it.

    // api.rs
    #[repr(C)]
    struct IMPORTANTSTUFF {
        ...
    }

    extern "C" {
        fn doStuff(data: *mut IMPORTANTSTUFF);
    }

    // We want to provide a safe wrapper over these rowdy C functions
    struct Wrapper {
        ...
        boxed_tmp_ref: Box<IMPORTANTSTUFF>,
    }

    impl Wrapper {
        fn do_stuff(&mut self, ...) {
            ...
            // Heap-allocated and owned by the Wrapper, so it outlives this call
            self.boxed_tmp_ref = Box::new(IMPORTANTSTUFF {...});
            unsafe {
                doStuff(self.boxed_tmp_ref.as_mut());
            }
            ...
        }
    }

We had a similar issue when dealing with OpenGL. OpenGL calls have to occur within a thread-local OpenGL Context, in this case with wrapped Contexts provided by glutin. We weren't holding on to the Context that we needed for the encoder, which led to the Context being deallocated before the encoder's deallocation code could run. When combined with a case of a stack-allocated pointer like the one above, this was pretty hard to debug and held back progress for multiple days.
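Coming back to the heap-allocation fix for a moment, the property it relies on can be checked in a few lines. The sketch below uses hypothetical `Params` and `Wrapper` types (not the real SDK structures) and never calls into C; it only shows that the address of boxed data stays the same when the wrapper that owns it is moved.

```rust
/// Hypothetical stand-in for a parameter struct handed to a C API.
#[repr(C)]
struct Params {
    width: u32,
    height: u32,
}

/// Owns the parameters on the heap, so their address does not depend on
/// where the wrapper itself happens to live.
struct Wrapper {
    params: Box<Params>,
}

impl Wrapper {
    fn new(width: u32, height: u32) -> Self {
        Wrapper {
            params: Box::new(Params { width, height }),
        }
    }

    /// The address a C function would receive for the parameters.
    fn params_addr(&self) -> *const Params {
        &*self.params as *const Params
    }
}

/// Moves the wrapper to a new location and reports the address of the
/// boxed parameters before and after the move.
fn addr_before_and_after_move(w: Wrapper) -> (*const Params, *const Params) {
    let before = w.params_addr();
    let moved = Box::new(w); // the Wrapper itself moves...
    let after = moved.params_addr(); // ...but the boxed Params stay put
    (before, after)
}
```

Both addresses returned by `addr_before_and_after_move` are equal. One caveat worth keeping in mind: assigning a new `Box` to the field drops the old allocation, so if the C side still holds the previous pointer at that moment, it dangles again.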

Eventually, with the help of Ryo's and Brian's incredible debugging skills, we were able to push through and have working encoder and decoder implementations! In this case, we just had to explicitly hold the Context in our encoder structure and make sure that its deallocation happened after the encoder's deallocation. Hopefully some of our solutions to these issues might give some people stuck on their own FFI projects an idea of what to look for when using Rust to integrate with C via FFI.
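One way to get that ordering in Rust is to lean on the rule that struct fields are dropped in declaration order. The sketch below is an illustration under that assumption, not the actual encoder: `Context`, `Session`, and `Encoder` are hypothetical stand-ins that only record when they are dropped.

```rust
use std::cell::RefCell;
use std::rc::Rc;

/// Shared log so the drop order can be observed.
type DropLog = Rc<RefCell<Vec<&'static str>>>;

/// Hypothetical stand-in for the OpenGL context.
struct Context {
    log: DropLog,
}

/// Hypothetical stand-in for the encoder session, whose cleanup needs a
/// context that is still alive.
struct Session {
    log: DropLog,
}

impl Drop for Context {
    fn drop(&mut self) {
        self.log.borrow_mut().push("context");
    }
}

impl Drop for Session {
    fn drop(&mut self) {
        self.log.borrow_mut().push("session");
    }
}

/// Fields are dropped in declaration order, so `session` is cleaned up
/// while `context` is still alive.
#[allow(dead_code)] // the fields exist only to own the resources
struct Encoder {
    session: Session,
    context: Context,
}

/// Builds an encoder, drops it, and returns the order in which its parts
/// were dropped.
fn drop_order() -> Vec<&'static str> {
    let log: DropLog = Rc::new(RefCell::new(Vec::new()));
    let encoder = Encoder {
        session: Session { log: Rc::clone(&log) },
        context: Context { log: Rc::clone(&log) },
    };
    drop(encoder);
    let order = log.borrow().clone();
    order
}
```

Calling `drop_order()` yields `["session", "context"]`. Swapping the two field declarations would reverse the order, which is the kind of situation described above where the context disappears before the encoder's cleanup can run.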

Debugging these allocation issues was not a fun process. The encoder and decoder implementations are surprisingly terse and monolithic for what was supposed to be example code. Even worse, I didn't have access to Nvidia's internal source code for the Video Codec SDK (even after being given "access", there was no source). This meant debugging what was wrong could be a guessing game a lot of the time.

Coupled with the fact that the team was busy actively installing new tonari portals at a few different locations during the latter half of my internship, I was sometimes on my own and out of ideas on what to do next. Sometimes I just needed a break from the main project... which ended up being a great opportunity to explore other parts of tonari that I was interested in working on!

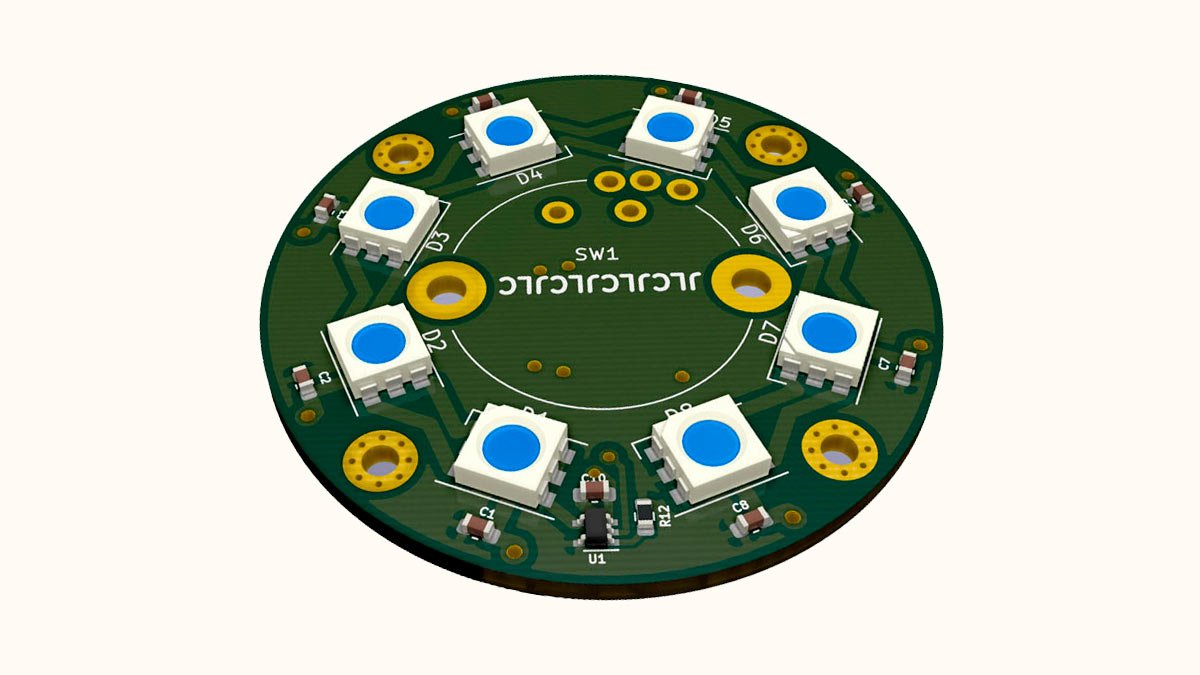

Building and Updating PCBs

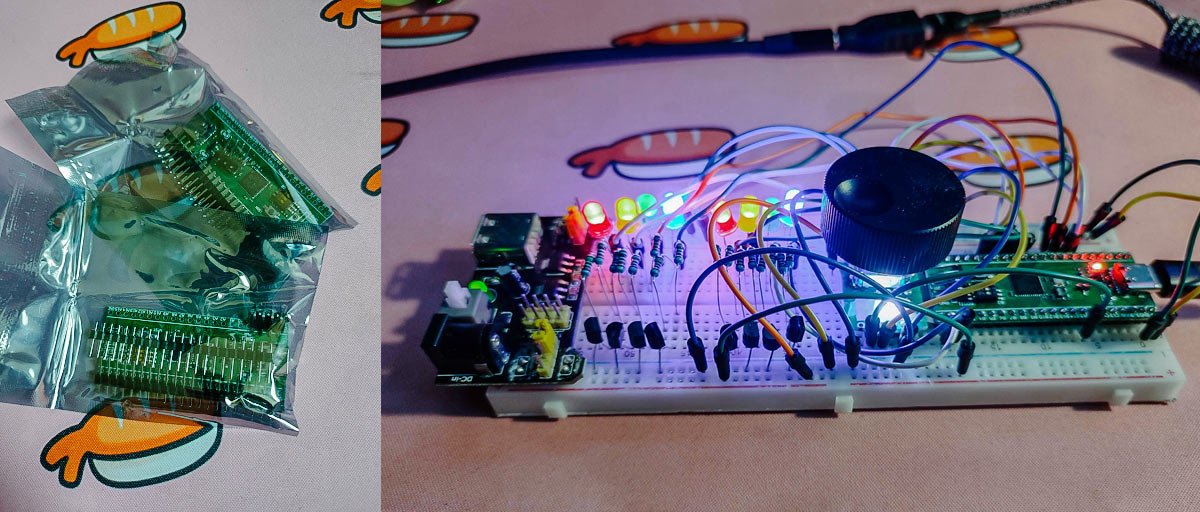

During my time at tonari, I had a lot of freedom to decide what to work on. I happen to have some hobbyist experience with designing simple PCBs, so I wanted to see if I could help in that area. tonari rolls some of its own PCBs for the hardware interaction components of the installation, namely the main volume dial and the lighting controls. The volume dial lets the user control the volume (as one would expect), change the mode of the installation, and choose whether to cast (share) additional content.

Unfortunately, due to the chip shortage, it has been difficult to find the exact STM32 microcontroller chip that tonari uses, so I went with a different version that was easily available on Amazon as a breakout board. Even then, I was pretty quickly able to set up my own hardware development station. I happened to have a lot of the components left over from buying extra parts from AliExpress for my own mechanical keyboard builds, which made it incredibly easy to just breadboard everything together. There were a few minor snags, but overall getting set up with the embedded Rust firmware was an absolute breeze.

Over the course of my internship, I was able to switch back and forth between my main project and the firmware/PCB side of things. The firmware work was pretty much cleaning up the colors and animations to match tonari's other UX elements. With the PCB work, I redesigned the volume dial PCB to work with newer components and redrew the footprints so that they integrate more easily into tonari than the current ones and match their datasheet specifications more closely for better reliability. All of this was done in KiCAD, an easy-to-use open-source PCB design tool that we use at tonari to design our in-house printed circuit boards.

It's All About Balance

What made this internship really work was how tonari as a team made it easy to find balance. The codebase is small enough and organized enough that I could practically go wherever I wanted and get acquainted in a few days at most. But more importantly, I had the freedom to work across a variety of issues and projects that spanned completely different parts of the application. While the Video Codec SDK wrapping was my main project, it never really felt like I was limited to working only on that part of tonari. It's incredibly refreshing to be able to swap to thinking in a completely different paradigm for a bit while still feeling like I'm making progress on something that forms a core part of the tonari experience.

The culture at tonari has been just as important to me. My general impression of startups before tonari was honestly pretty terrible: I have a friend who was overworked and taken advantage of at a startup under the guise of "internship work." I've seen startups that say you can work 40-hour weeks, but strongly recommend working 50-hour weeks or even more because that's what all their employees do, since they're "so passionate" about their work. The team culture at tonari is a rather large departure from those vibes, and it's an incredibly refreshing experience to be immersed in.

tonari has one-on-one meetings internally, which are meetings with anybody at tonari (not just inside the dev team) to talk about anything you want, whether that's work-related or life in general. Something that I realized pretty quickly while having my first one-on-ones with everyone was that most of the team is past their 20s, with families to take care of and a life outside of work. I'd say that's usually not what most people would expect a startup to look like. Fridays are even specifically super flexible to make it easier for people to take 3-day weekend vacations. When you're sick or overwhelmed, it's almost expected for you to take time off so you can recuperate. That's not to say that the work at tonari is boring in any way. Instead, it makes it clear to me that the focus at tonari is on the people who have built it from the ground up.

Thanks!

I'd like to give special thanks to my mentor Brian Schwind and supervisor Ryo Kawaguchi, especially for providing me tons of support and assistance with my projects and the internship in general. Also, a big shout out to everyone at tonari for being super welcoming, easy to talk to, and awesome people in general! And of course, thanks to you for reading, and hopefully there's something here that you can take away. 🙂

If you’re interested in our product and team, you can learn more about tonari on our blog and website, and follow new developments via our monthly newsletter.
