Editor’s note: this was written around Halloween, hence the spooky theme. 👻

“Any sufficiently advanced technology is indistinguishable from magic.” - Arthur C. Clarke

tonari is the connection of two spaces.

It might sound like a marketing truism, but there's much hidden in that concise statement. It is the simplest way I've come to understand this unique, mysterious oddball of a product in the three years I've worked on it. While not at all an official slogan, I believe it speaks of the design ethos that makes tonari feel unlike technology, but like magic.

As with most meaningful statements, it's also about what it doesn't say. So if tonari is the connection between two spaces... What is it not?

It's definitely not the time you flush down the swirly vortex of "loading" animations while waiting for a video call to start. It's not your own tired face, staring back from the virtual mirror as you join the daily standup, wondering what whimsical animation to overlay on your messy background. tonari is not the maze of invitations, addresses and links you navigate every day, coffee in hand, in the tedious chase of someone else's time.

No, tonari is none of that. Because all of these things, which we've come to accept as necessary evils of communication, have nothing to do with the connection between two spaces. All of these extraneous elements create friction, and this friction, in turn, draws the spotlight from connecting spaces to connecting people. And when you're connecting people, you inevitably feel the tension associated with holding someone's attention: that awkward silence at the end of a call, that urge to end the meeting early so as not to waste your colleague's time, that anxiety about your own appearance.

By shifting the focus from connecting people to connecting spaces, the tension disappears. Spaces outlive people. They were connected before you arrived and they'll remain connected when you leave. This solidity, quietly handled in the background by invisible technology, is what makes tonari feel like being somewhere instead of just with someone.

The unsung hero

So, to achieve that goal in Arthur C. Clarke's quote, how can you possibly simplify technology down to magic? Ideally, the user should be able to forget about all these little points of friction associated with remote presence. They shouldn't be responsible for adjusting the volume, ensuring the connection is available and reliable, or checking whether hopping to a different location disturbs an important meeting.

Here's where the "sufficiently advanced technology" side of the quote comes into play. These decisions can't be ignored or left to chance, so if the user isn't making them... Who is?

The invisible fairies that live inside the portal, of course!

The illusion of simplicity is held together by complex decisions made behind the scenes in real time. In particular, many of those decisions depend on whether someone is present in front of one or both of the portals at any given time, and on their relative distance to the screen. Several subtle and not-so-subtle aspects of the portal's behavior depend on it, including:

- When someone approaches the portal from the other side, local volume increases so they're easier to talk to, while keeping background noise at a minimum when the portal is unused.

- Presence in front of the portal delays the transition to low power mode at the end of the workday, so that late-night tonari sessions aren't interrupted too early.

- When choosing a location to hop to, locations appear as "In use" when there's presence on both sides, to ensure hopping doesn't cause a disturbance.

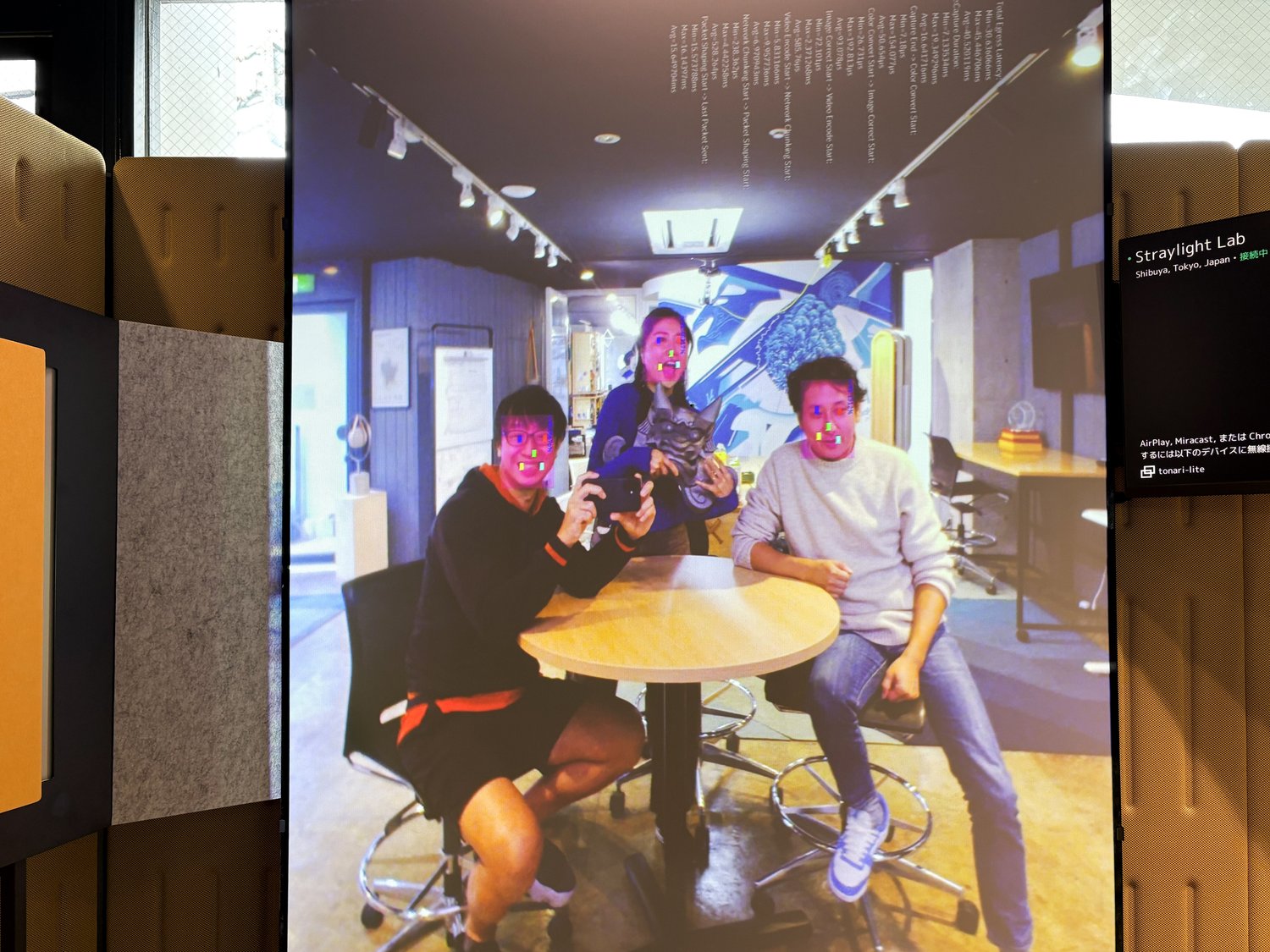

- When participating in a hybrid call — i.e. where tonari portals join a Zoom/Teams/Meet call — face position is used to dynamically adjust the zoom and create the perfect frame around every participant.

- Certain maintenance tasks are pushed back so they don't happen while the portal is in use.
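The behaviors listed above boil down to small decisions derived from presence on each side. As a rough illustration only (this is not tonari's actual code; the `Presence` type, the function names and every threshold below are hypothetical), two of those rules could be sketched in Rust like this:

```rust
/// Hypothetical summary of what face detection reports for one portal.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Presence {
    /// Rough distance to the nearest detected face, in meters.
    /// `None` means nobody is currently detected.
    pub nearest_face_m: Option<f32>,
}

impl Presence {
    pub fn is_present(&self) -> bool {
        self.nearest_face_m.is_some()
    }
}

/// A location shows up as "In use" only when there is presence on both sides.
pub fn is_in_use(local: Presence, remote: Presence) -> bool {
    local.is_present() && remote.is_present()
}

/// Local playback gain in 0.2..=1.0, driven by presence on the remote side:
/// quiet when nobody is there, louder as the remote person gets closer.
/// All constants are made-up illustration values.
pub fn playback_gain(remote: Presence) -> f32 {
    const IDLE_GAIN: f32 = 0.2;
    const NEAR_M: f32 = 1.0;
    const FAR_M: f32 = 5.0;
    match remote.nearest_face_m {
        None => IDLE_GAIN,
        Some(distance_m) => {
            let d = distance_m.clamp(NEAR_M, FAR_M);
            // Linear ramp: 1.0 at NEAR_M, falling to 0.0 at FAR_M.
            let closeness = (FAR_M - d) / (FAR_M - NEAR_M);
            IDLE_GAIN + closeness * (1.0 - IDLE_GAIN)
        }
    }
}
```

The point of the sketch is that none of these rules need to know who is there, only whether someone is and roughly how far away they are.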

Thus, early in the development of tonari, we realized we'd need to detect the presence and position of people in front of each portal. We've gone through multiple iterations of this feature, involving a couple of different AI-driven approaches, and this post focuses on the one we currently use in production. We'll take a quick tour of how we detect and measure faces, offer you some juicy links if you want to dive deeper, and there will be a prize at the end if you stick with us! (Hint: we are open sourcing our face-detecting wrapper library!)

Now let’s detect some faces!

Detection vs. recognition

Even if the HAL 9000 reference above wasn't ominous enough, I wouldn't blame the privacy-aware reader for being a bit apprehensive about face detection. When I first heard about our need for it, my mind immediately wandered to border controls, casinos, social credit, surveillance, and many other unsavory uses of this technology.

It's important to point out that tonari only does face detection, and it's completely incapable of any form of face recognition. This means the portals can tell when someone is standing in front, and deduce some basic metrics such as face rectangle size, and distance between major features such as eyes and mouth. There's neither a way for portals to recognize or remember specific individuals, nor a reason why we'd want them to. So if you ever find yourself alone in front of a portal and feel the urge to make mean faces at it, don't worry: it will forgive you, and it won't snitch.

Choosing the right model

Earlier forms of the detection algorithm used by the tonari portals didn't actually focus on detecting faces, but rather on detecting people. While there are some practical benefits to this, such as accurately sensing the presence of someone looking away from the portal, it came with a few significant drawbacks:

- Detecting a human body in an arbitrary posture requires far more complex computation. This meant running the detection code on the GPU instead of the CPU, which hurt the performance of our graphics pipeline, since tonari already needs substantial GPU-CPU memory transfer bandwidth.

- Generally, when it comes to portal usage, we do care about the direction the user is facing, so detecting bodies isn't as helpful as it might seem. Yes, people do take naps in front of portals. No, we really don't want to make the snoring louder!

- The bounding box of a (specific) human body can have arbitrary dimensions, which means it's very difficult to tell how far someone is from the portal by just inspecting the bounding box of their body, as it really depends on the posture.
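Faces, by contrast, have fairly constant proportions, which is what makes a face-based distance estimate plausible. The following is a minimal sketch of that idea using the standard pinhole camera model, not a description of how tonari actually measures distance. The average eye spacing of about 63 mm, the `focal_length_px` parameter and the function names are all assumptions made for illustration:

```rust
/// Assumed average distance between adult pupils, in meters (about 63 mm).
const ASSUMED_EYE_DISTANCE_M: f32 = 0.063;

/// Euclidean distance in pixels between two landmark points.
pub fn pixel_distance(a: (i32, i32), b: (i32, i32)) -> f32 {
    let dx = (a.0 - b.0) as f32;
    let dy = (a.1 - b.1) as f32;
    dx.hypot(dy)
}

/// Rough camera-to-face distance in meters, from the pinhole camera model:
///   distance = focal_length_px * real_size / size_in_pixels
/// `focal_length_px` is the camera's focal length expressed in pixels
/// (a hypothetical calibration value). Returns `None` if the two eye
/// landmarks coincide, since the estimate would be undefined.
pub fn estimate_distance_m(
    right_eye: (i32, i32),
    left_eye: (i32, i32),
    focal_length_px: f32,
) -> Option<f32> {
    let eye_px = pixel_distance(right_eye, left_eye);
    if eye_px <= 0.0 {
        return None;
    }
    Some(focal_length_px * ASSUMED_EYE_DISTANCE_M / eye_px)
}
```

With a focal length of 1000 px, eyes that appear 63 px apart would put the face at roughly one meter. A head turned sideways shortens the apparent eye spacing, so an estimate like this tends to overstate distance for people who aren't facing the camera.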

When reworking this feature, we researched many different face detection libraries. Face detection is still very much an open problem, with a myriad of approaches and solutions, both proprietary and open source. Ultimately, we settled on YuNet, an extremely lightweight, CPU-based face detection model developed by Wei Wu, Hanyang Peng and Shiqi Yu, with a performant C++ implementation available on GitHub.

Wrapping YuNet

YuNet is a C++ library which depends on OpenCV, a popular open-source computer vision framework. Fortunately for us, calling C++ functions from a Rust codebase is a problem that, while complex, can be solved with some amazing community tools. In this case, we used CXX, a crate (library) written by David Tolnay. The CXX crate allows us to seamlessly call C++ functions from Rust by defining some common constructs that are understood by both languages.

I won't delve into the details here, as this is not meant to be a technical walkthrough, but the Rust enthusiasts among you might enjoy taking a look at the wrapper directly, as we just open-sourced it. The important bit, and the only code we'll show here, is the definition of a face as understood by YuNet, interpreted as a Rust type:

    #[derive(Debug, Clone, Serialize)]
    pub struct Face {
        /// How confident (0..1) YuNet is that the rectangle represents a valid face.
        confidence: f32,
        /// Location of the face in absolute pixel coordinates. This may fall outside
        /// of screen coordinates.
        rectangle: Rect<i32>,
        /// The resolution of the image in which this face was detected (width, height).
        detection_dimensions: (u16, u16),
        /// Coordinates of five face landmarks.
        landmarks: FaceLandmarks<i32>,
    }

    #[derive(Debug, Clone, Serialize)]
    pub struct FaceLandmarks<T> {
        pub right_eye: (T, T),
        pub left_eye: (T, T),
        pub nose: (T, T),
        pub mouth_right: (T, T),
        pub mouth_left: (T, T),
    }

As you can see, there's not much to a face. Saint Jerome may have said that the face is the mirror of the mind, but in our case it's just a box with five dots. Not too profound, but good enough for us! With the simple and clean definition of a face out of the way, all that's needed is to call a function with the following signature, and a freshly baked tray of faces will be served in exchange for raw image data.

    pub fn detect_faces<T: ConvertBuffer<ImageBuffer<Bgr<u8>, Vec<u8>>>>(
        image_buffer: &T,
    ) -> Result<Vec<Face>, YuNetError>

By calling the detect_faces function at a configurable frame rate, we achieve the real-time presence data we're looking for, with a negligible performance impact. The YuNet benchmarks weren't lying: the model is surprisingly fast and small, so it fits right into the tonari machinery much more snugly than other models we'd used in the past.
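Per-frame detection results tend to flicker, so a polling loop like this usually benefits from some smoothing before it drives decisions such as low power mode. The sketch below shows one common way to do that, a simple debounce with separate enter and exit thresholds. It is an illustration under assumptions, not tonari's implementation: `PresenceFilter` and its parameters are hypothetical, and it takes a plain face count so that it stays independent of any detection library.

```rust
/// Turns noisy per-frame face counts into a stable presence flag.
#[derive(Debug)]
pub struct PresenceFilter {
    /// Consecutive frames with a face needed to switch to "present".
    enter_frames: u32,
    /// Consecutive frames without a face needed to switch to "absent".
    exit_frames: u32,
    /// How many consecutive frames have disagreed with the current state.
    streak: u32,
    present: bool,
}

impl PresenceFilter {
    pub fn new(enter_frames: u32, exit_frames: u32) -> Self {
        Self { enter_frames, exit_frames, streak: 0, present: false }
    }

    /// Feed the number of faces found in the latest frame and get back
    /// the debounced presence state.
    pub fn update(&mut self, face_count: usize) -> bool {
        let saw_face = face_count > 0;
        if saw_face == self.present {
            // The frame agrees with the current state: forget past disagreement.
            self.streak = 0;
        } else {
            self.streak += 1;
            let needed = if self.present { self.exit_frames } else { self.enter_frames };
            if self.streak >= needed {
                self.present = saw_face;
                self.streak = 0;
            }
        }
        self.present
    }
}
```

In a real loop, each tick would pass in something like the length of the vector returned by `detect_faces`. Using a larger exit threshold than enter threshold makes presence quick to appear and slow to vanish, which suits cases where someone briefly looks away.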

Beyond that, YuNet is a small, self-contained library, so rather than specify it as a dependency we decided to save a local copy of a specific commit. That way we don't need to worry about updates unless we find out about a safety, security or soundness issue.

Ghosts in the machine

A story about presence detection wouldn't be complete without a creepy campfire tale.

As a product designed to connect spaces at any time of the day—and night—tonari is delightfully prone to feature in ghost stories. We've had many experiences over the years ranging from the mildly paranormal to the definitely haunted, from ghastly screeches to brief spikes of inexplicable presence, and even unexpected flashes of long-forgotten locations, frozen in time.

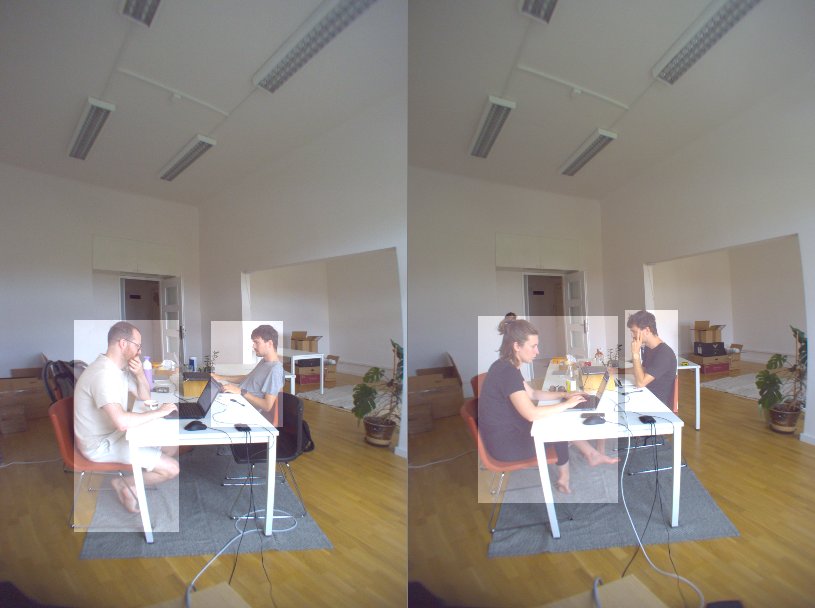

All that being said, our first experiences with YuNet were fairly uneventful, showing none of the spurious presence spikes we used to see with its predecessor, appropriately—and ominously—named Darknet. The only spooks we suffered during development were of the jumpscare variety, when we experimented with the zoom-to-face feature of hybrid calls. I'll let the video speak for itself:

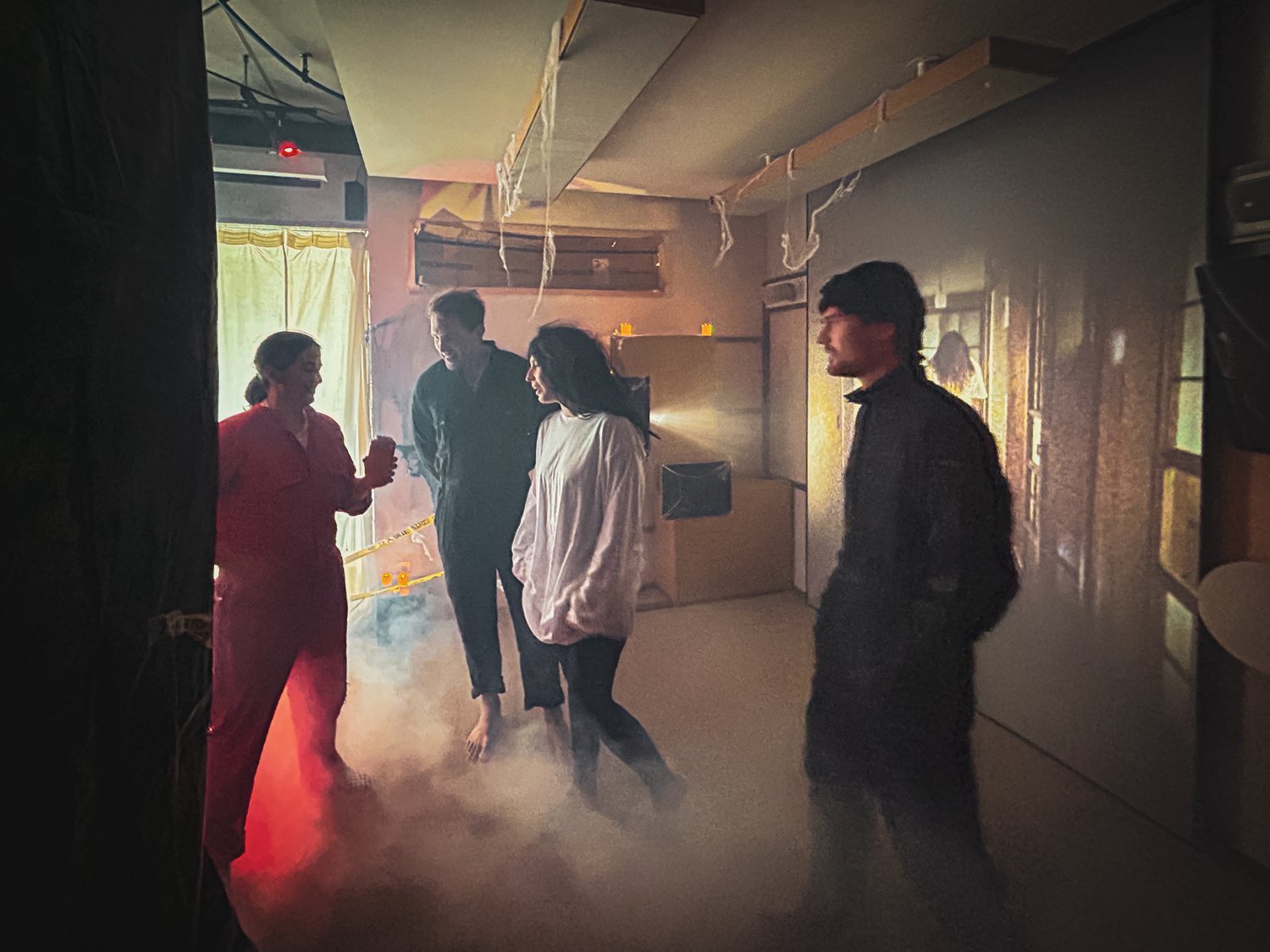

However, not long after we rolled YuNet face detection to production portals, we noticed one installation in particular had been kept awake all night, prevented from entering low power mode by a persistent presence. Intrigued, we checked with the customer, who turned out to be just as confused as we were. No, they knew of no diligent employee pulling an all-nighter. Not even a burglary would've explained the nightly presence, as it had been surprisingly still and stable.

We suspected an issue in our code, but since we couldn't find the source of the bug, we asked the client for an image of the scene in front of the portal. We can't quite share it for privacy reasons, but I hope you'll appreciate this reenactment:

And thus, the beast that kept tonari awake all night was revealed. That's the problem with faces: they are owned by the living... And also by the dead.

Call for ideas and alternatives

While YuNet has worked well for us thus far, the time we spent researching a Rust solution to the face detection problem was limited, and we're aware we might have ended up with an imperfect solution. If you have experience with either Rust or face detection, we'd love to hear your thoughts, especially if you have any hints on how to achieve face detection at sharper angles—when the subject is turned more than 90 degrees away from the camera.

We're always excited to meet like-minded people who are fascinated by what technology can do and by the beauty of Rust. Please reach out at hey@tonari.no or through our team page if you're interested in working with us from Tokyo, Hayama or Prague.

And as promised, here’s the open source rusty-yunet wrapper that powers our face detection technology. If you like Rust and are excited to have your face detected, give it a go!

If you enjoyed this and want to learn more about tonari, please visit our website and follow our progress via our monthly newsletter. And if you have questions, ideas, or words of encouragement, please don't hesitate to reach out at hey@tonari.no. 👋
